By Kendra Little on January 17, 2024

I’ve written a bit about SQL Server’s Automatic Plan Correction feature before– I have an hour long free course with demos on Automatic Plan Correction here on the site.

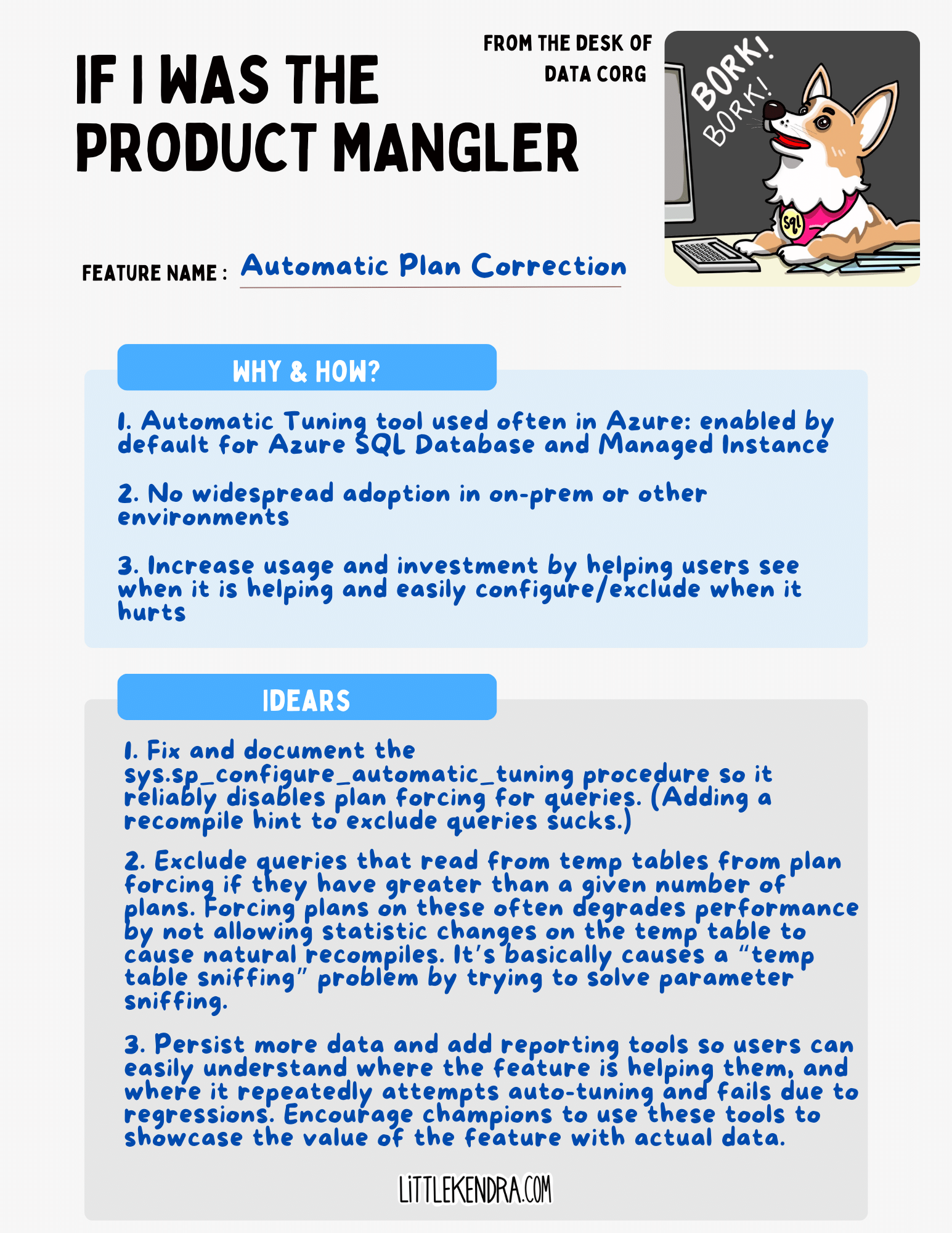

Today I’m updating that course with a note: after using Automatic Plan Correction in anger for a good amount of time, I do not recommend enabling the feature. I’ve had it cause too many performance problems, and there are not a ton of options for an administrator when it’s causing those problems.

Meanwhile, becoming reliant on the feature for the places where it does help makes it difficult to disable the feature. You end up stuck with a very weird set of problems that are oddly similar to the problems the feature was designed to solve.

Further investment in the feature could solve these problems and make this a great tool for customers. Here’s a run down of what Automatic Plan Correction needs from a user who has suffered from it.

It’s an all or nothing feature because the sys.sp_configure_automatic_tuning procedure was never finished, documented, and supported

Picture it: you’re a DBA or developer who gets an alert that a key part of your application is running too slowly. You dig in and find that there’s a slow query causing the problem.

You think, “maybe it’s parameter sniffing?” You use a tool like sp_BlitzWho to identify that the query is running long, and it even gives you a handy command to bump the query plan out of the shared plan cache so that a new plan might be compiled. You run that command.

Nothing changes. New sessions running the query get the exact same plan, no matter how many times you bump it out of the cache.

You start digging around and you finally find the plan in Query Store. You see that the plan is forced. If you look into sys.query_store_plan, you see that the plan_forcing_type is ‘Auto’.

This means that the automatic plan forcing feature is enabled, and has forced a plan in Query Store in an attempt to help performance. It may be still testing out if the plan is better or not, or it may actually believe that it’s better – you’d have to go query the sys.dm_db_tuning_recommendations table to figure that out. (Better do that fast, or the info may get cleared out– it doesn’t get persisted across restarts/failovers,etc, and it also might be overwritten after statistics change.)

How do you fix it?

Short term: you can manually un-force the plan in Query Store. This will allow a new plan to be generated. The problem may come back, however, because un-forcing a plan doesn’t exclude a query from future automatic tuning cycles. (When will those restart? I donno, I don’t think it’s documented. But it’s not rare.)

Long term: you can add a recompile hint to the query. This will prevent Automatic Plan Correction from picking it up, but the trade off is that you are going to burn more CPU for that query every time it optimizes. If the query runs very frequently, or if you find a problem where a large set of queries has a regression (like I describe below with temp tables), that becomes a pretty expensive option.

I suspect that the original design of the feature meant for users to be able to address this in different ways. Erik Darling noticed that there is a procedure in SQL Server named sys.sp_configure_automatic_tuning which is not documented, and filed an issue asking about it. This procedure looks like one could use it in this way:

EXECUTE sys.sp_configure_automatic_tuning

@option = N'FORCE_LAST_GOOD_PLAN', /*Option*/

@type = N'QUERY', /*Target*/

@type_value = 1, /*query_id from Query Store*/

@option_value = N'OFF'; /*Disable*/

Although Erik notes that he saw this worked, I’ve had more mixed results in testing. It sort of worked– but a new query id for the query I excluded was generated in Query Store and then automatic plan forcing went after THAT. (Clown face emoji.)

This leads me to believe that the feature to manually configure plan forcing per query wasn’t completely finished, and it was cut from scope in order to get the feature shipped. (I am not a Microsoft MVP. I have no special knowledge here. I’m only guessing based on my own experiences as a product manager at various software companies.)

I have found that the feature is badly needed, however, and I think it’s worth the time/investment for Microsoft to get sys.sp_configure_automatic_tuning completed and working.

Some queries are not suitable for repeated experimentation

So, which kinds of queries might you want to exclude from Automatic Plan Correction? I’ve found there are two big groups. One of them could be fixed by Microsoft without fixing sys.sp_configure_automatic_tuning– but this one, not so much.

The first group is queries that can’t afford experimentation. If you run a high performance OLTP system, you are likely going to have some queries that need to always be very fast. The Automatic Plan Correction feature works by experimentation: after you get several hundred runs of a query, if it notices some are slower than others, it will try forcing some of the faster execution plans, then observing if they get slower. If it regresses, it’ll unforce the plan and try another one. (Exactly how many will it try if you have many many plans? I’m donno, I don’t think this is documented. It seems like a lot.)

Even if Automatic Plan Correction finds a plan to force that is nice and fast, it won’t last forever. Statistics updates, failovers, or other situations will cause the experimentation cycle to restart.

This results in uneven performance, and a LOT of confusion whenever code on these mission critical queries is updated, as it can take a while for the plan forcing to kick in. There are two things to help this situation:

- Give users the ability to exclude individual queries without recompile hints via a working

sys.sp_configure_automatic_tuningprocedure. - Give users data that is persisted longer so that they can identify plans that are repeatedly forced, which ones regress, and which ones don’t. These can be either manually forced, or the queries and indexes can be tuned to stabilize performance. Currently, not enough data is persisted over time to make this workable without a huge time investment on the user’s part to regularly persist, groom, and analyze the data. But this ability could make the pain of uneven performance worth it– because it would give information needed to help resolve the issues longer term.

Automatic Plan Correction invented “Temporary Table Sniffing” problems – and they hurt

Another class of queries that you likely want to exclude from Automatic Plan Correction is queries that read from temporary tables.

One of the most common performance tuning techniques used with SQL Server is to break down complex queries into separate, smaller steps, using a temporary table to persist data calculated by the initial query. This helps you not create single massive queries with 50+ joins in them by staging a set of data with the core of your result set, then joining to it to get details in further steps.

Temporary tables are often recommended for this because they support column and index statistics. These statistics help the SQL Server query optimizer understand how much data it is working with, and recompile query plans if the size of the data in the temporary table one one run is significantly larger than the last time it ran.

Automatic Plan Correction breaks this performance tuning technique, in my experience. It will freeze query plans that read from temporary tables and prevent them from recompiling. I’ve seen some truly ridiculously bad performance from this happening.

This is ironic/sad when you start to think about it. Automatic Plan Correction as a feature is designed to help with parameter sniffing problems, where a plan gets cached that may not suit the variations of parameters that are passed in on later executions. But with temporary tables, it basically creates a different temporary table “sniffing” problem: when it freezes plans, it is “sniffing” the plan based on the statistics coming out of the temp table in that iteration of the plan. So it creates a different flavor of the problem it’s designed to solve.

I have code that reproduces this issue with the Stack Overflow database that I’ll publish in a future post– it’s not that hard to show, but this post is big enough.

Two things could solve this:

- Automatic Plan Correction could automatically exclude queries that read from temporary tables when there is a large number of plans (maybe greater than 10 or 15). This high number of plans likely indicates that the temp table is causing natural recompiles that are actually beneficial for performance.

- The

sys.sp_configure_automatic_tuningprocedure could be finished and given options to handle this type of exclusion.

Is it helping you overall? Or is it hurting your performance more? The data doesn’t stick around long enough to find out.

I think Automatic Plan Correction has a lot of the ingredients to make an impressive feature that does a very important thing: save customers money.

If a feature can make it possible for customers to demonstrate that enabling it saves them money, people will start shouting about it. They’ll recommend it to others. It’ll take off.

That’s not happening now. Currently, Automatic Plan Correction is enabled by default in Azure SQL Database and Azure SQL Managed Instance. Some customers disable it. I almost never hear of customers who don’t use Azure SQL who enable it. Part of that is likely that they never hear about it, because nobody is shouting about it. Part of it is probably hesitancy to turn something on without a clear way to understand and show if it is helping or hurting.

To fix this:

- Data from

sys.dm_db_tuning_recommendationsneeds to be able to be persisted. Right now, you can only see data for current recommendations. There is only one row per query_id. What experiments were performed last week? Before the last restart? How many experiments have been performed overall for a given query, and what were the outcomes? Make it possible – ideally simple– for users to figure that out, and you’ve given users the tools to understand if you are saving them money. That’s a huge tool. You’ll get champions, adoption, increased advancement in the feature. This will also lead to greater product stickiness for SQL Server. (Could I rig this up myself as a user with duct tape and stubbornness? Probably. Do I have the time and think it’s worth the business investment for this code to be managed by an individual over time? Nah, it should be part of the feature.) - A variant of this functionality could also exist when the feature is not fully enabled, based on the recommendations it makes alone. Reports could estimate how much savings might be realized if the feature is enabled.

Fun times with automatically forced plans in a failure state which don’t un-force themselves

In my experience, it is not uncommon for automatic tuning to force a query plan which ends up in a “failure” state. You can view this in the force_failure_count and last_force_failure_reason columns of sys.query_store_plan. Generally I see the last_force_failure_reason to be GENERAL_FAILURE.

In theory, this shouldn’t necessarily create a big problem. And in my experience, it doesn’t usually cause a big problem. However, once I have had it cause a VERY weird problem of very extended compilation time for a query. The problem went away when the query plan forced that was in the GENERAL_FAILURE state was removed.

In either case, however, what I’m seeing is that Automatic Tuning is forcing a query plan, and then that plan forcing ends up failing– and Automatic Tuning doesn’t notice and clean that up. It should notice and clean that up.

Automatic Plan Correction has great potential and is worth further investment

Although I don’t recommend using this feature now, I think the issues with this feature are simply due to lack of continued investment and maturing the feature by Microsoft. That’s very fixable.

I do believe that in many cases this feature can solve a lot of gnarly parameter sniffing problems! I wish I could recommend it. It just needs a few more levers to be added and to make it easier for users to analyze and share when it is working well, and if it is actually saving them resources and time.